Scaling DeFi: Layer One

Originally published on the 0x Labs Blog.

A visual tour of Ethereum's scaling challenge.

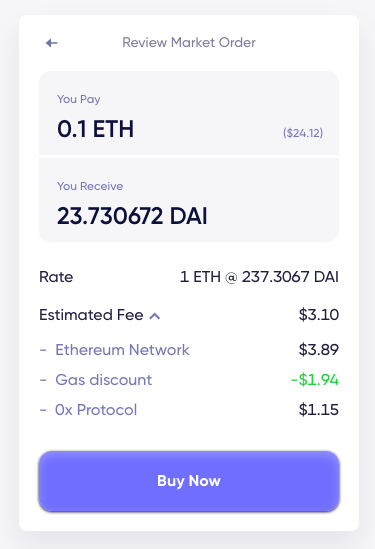

Small trades can end up paying more than 10% in fees.

If you have used DeFi recently, you have experienced sticker shock from transaction fees. It is pretty normal nowadays to pay tens to hundreds of dollars in fees for an Ethereum transaction. At these prices only whales can trade profitably and you can forget about lofty goals like banking-the-unbanked or permissionless financial infrastructure for everyone. Ethereum is becoming a place for the rich.

The high fees are only a symptom of the larger blockchain scalability problem, a problem so notorious it has its own Wikipedia page. It is the biggest limitation of current blockchains, but there are others, such as long finality times, susceptibility to front-running, and limited cross-chain interoperability.

We want to create a tokenized world where all value can flow freely, and blockchain limitations are not helping our mission. This is why 0x Labs has a team of research engineers developing solutions to tackle those limitations. In this post we will explore what Ethereum's limits look like and how they affect you, the DeFi user. We will also briefly cover next-generation blockchains. In follow-up posts we will explore a different class of solutions, layer two, and present our own strategy to serve the needs of DeFi.

To start, remember that Ethereum transactions have different sizes, measured in gas. Transactions are collected in blocks, which are created about every 13 seconds. Each block has room for a limited amount of gas, known as the gas limit. Right now, each block has room for about 12 million gas worth of transactions. A plain ERC-20 token transfer takes about fifty thousand gas. This means a block can contain at most 240 token transfers, or about 18 transfers per second. DeFi transactions often involve multiple token transfers and other bookkeeping, which multiplies the cost and further limits the throughput. Together, the gas limit and block time mean there is a constant supply of gas available for transactions.
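The arithmetic behind these numbers is short enough to check by hand. The sketch below simply plugs in the approximate figures quoted above; the constant names are ours and the values are rounded, so treat the result as a back-of-the-envelope estimate.

```python
# Back-of-the-envelope throughput from the approximate figures quoted above.
BLOCK_GAS_LIMIT = 12_000_000  # gas available per block
BLOCK_TIME_S = 13             # average seconds between blocks
TRANSFER_GAS = 50_000         # gas for a plain ERC-20 transfer

transfers_per_block = BLOCK_GAS_LIMIT // TRANSFER_GAS
transfers_per_second = transfers_per_block / BLOCK_TIME_S
gas_per_second = BLOCK_GAS_LIMIT / BLOCK_TIME_S

print(transfers_per_block)             # 240
print(round(transfers_per_second, 1))  # 18.5
print(round(gas_per_second))           # 923077
```

A DeFi transaction that uses several times the gas of a plain transfer divides the transfers-per-second figure by the same factor.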

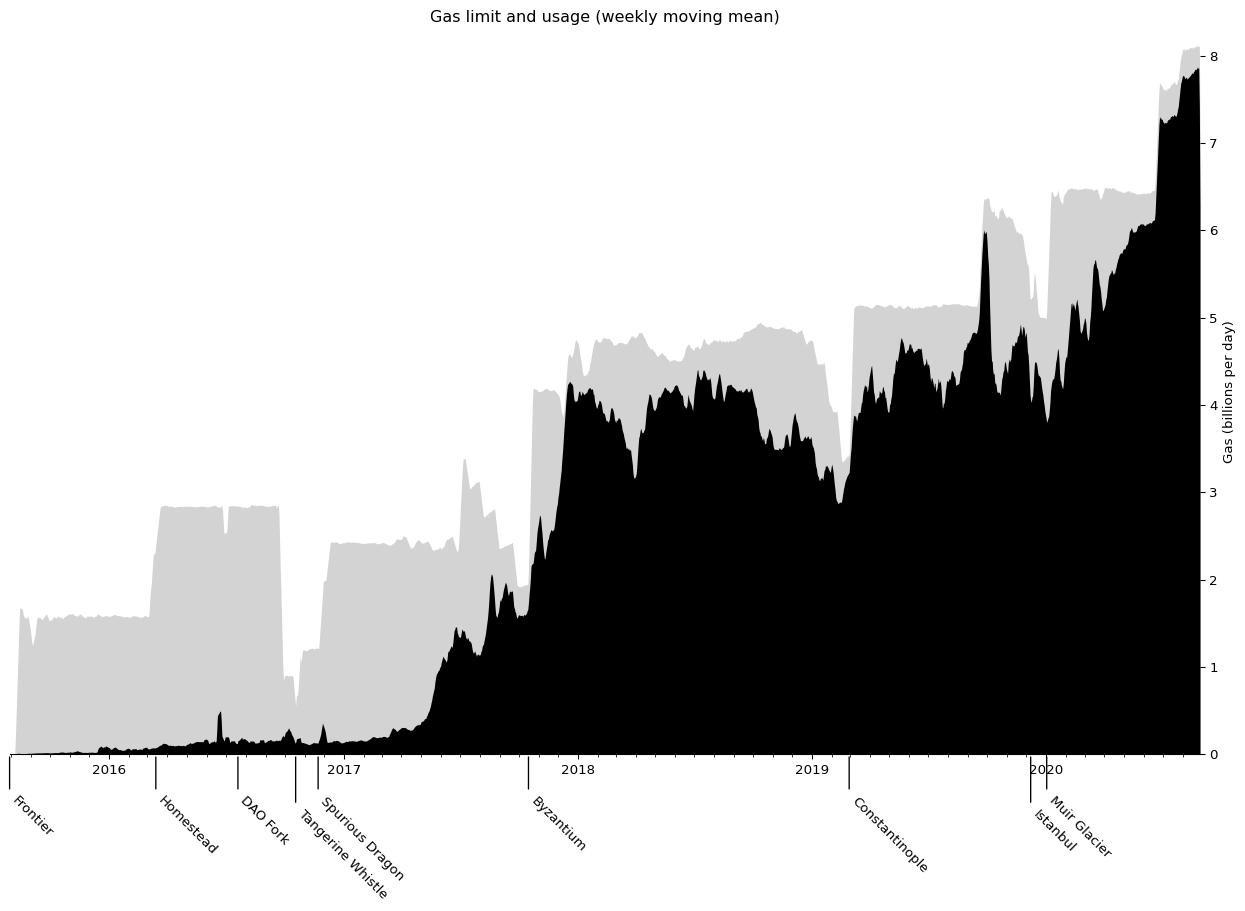

Let's start by looking at how the gas supply and its usage have grown over Ethereum's history.

Gas Usage

Each day about six thousand blocks are mined, which at the current gas limit creates room for tens of billions of gas worth of transactions. This amount has been changing and growing over time, mostly due to increases in the gas limit. Meanwhile the total gas consumed by transactions has also been growing as Ethereum gets more and larger transactions.

Looking back at the entire history of Ethereum the supply (gray) and consumption (black) of gas looks like this:

Right before the Byzantium, Constantinople and Muir Glacier hardforks, there are jagged crashes in the gas supply. These are the effects of Ethereum's Difficulty Bomb, also known as Ice Age. During an Ice Age, the block time increases exponentially causing fewer blocks to be mined in a day and thus a lower total daily gas supply. This is of course very undesirable and forces the network to adopt a hardfork to fix it. And that's exactly what it is for: it prevents innovation stagnation by forcing hard forks which bring many improvements. The Istanbul hardfork forgot to reset the bomb, so was quickly followed by Muir Glacier. The upcoming Berlin hardfork is considering changing this mechanism (EIP 2515).

Looking at the usage (in black) Ethereum has been running above 60% capacity since the ICO craze started in 2017. Since then the gas limit has increased fourfold in distinct jumps, each time followed by a commensurate increase in gas usage. For the last few months Ethereum seems stuck at 95% usage.

To understand why Ethereum can't go over 95% usage, we need to look at empty and ommer blocks.

Empty and Ommer blocks

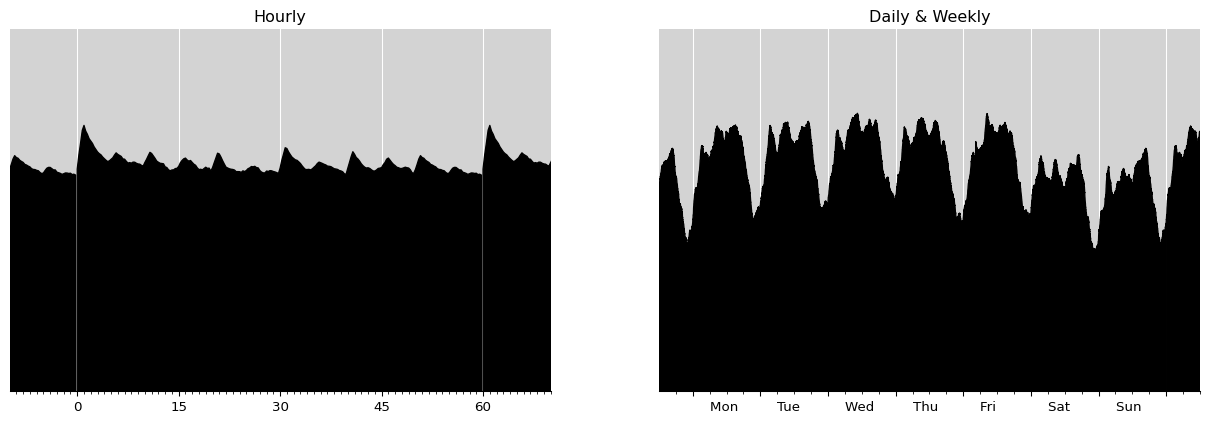

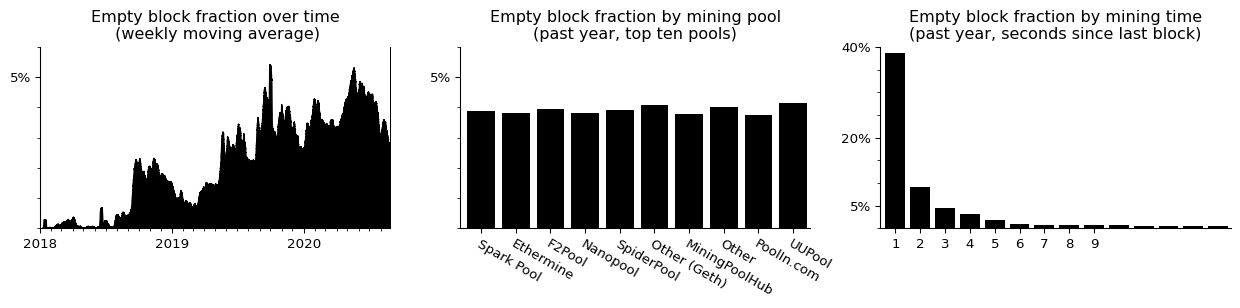

Throughout its history, Ethereum has never used more than about 95% of the limit, despite demand being there. Surprisingly, the remaining 5% is wasted in blocks that are completely empty. These empty blocks happen regularly, about once every twenty blocks. Why would someone mine empty blocks when there are transactions that will pay for inclusion? Let's look at the data:

The rate of empty blocks is steadily increasing over time and is currently at about 5%. All the mining pools contribute to it equally, so it's not a malicious miner. Instead, the real cause seems to be fast blocks. If the time to mine a block is less than 6 seconds, the chances of it being empty go up exponentially.

One explanation is that miners start mining the next block the moment they receive a new block header, but before they have processed the whole block. This trick, known as SPV mining in Bitcoin, helps miners get started on the next block immediately, but it can only produce empty blocks. Once the new block is fully processed, the miner can assemble the next full block and switch to mining that one. Further evidence for this explanation is that the empty block rate drops by 25% when the same miner finds two blocks in quick succession.

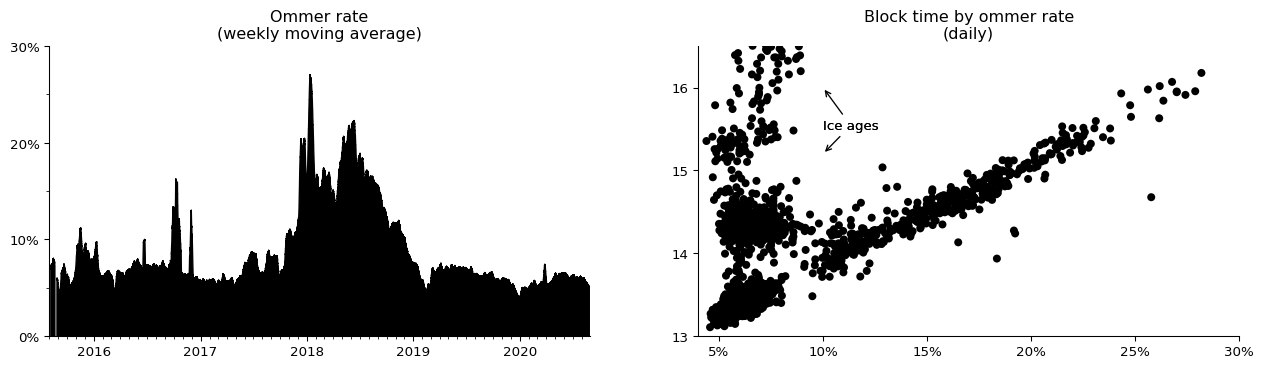

The alternative to mining empty blocks is to keep mining on the previous block while you are processing the new one. This can cause multiple next blocks to be mined. If this happens Ethereum will pick a canonical block and remember the others as ommers. Miners still get a small reward for the ommers. This happens at a steady rate:

The ommer rate peaked during the 2018 usage spike, but has been decreasing to a stable rate of 5% of all blocks mined. This coincides with the increase in the empty block rate as miners likely switched strategies around this time.

It is not immediately obvious that the ommer rate hurts Ethereum scaling but it does. Since EIP-100 in Byzantium the difficulty adjustment maintains a constant rate of canonical and ommer blocks. So a high ommer rate means more blocks are wasted on ommers and fewer grow the chain. This shows up as an increase in block times and thus less total gas available per day. (The other major cause for increased block times being ice ages.)

Whether it's ommers or empty blocks, these are important network health signals for Ethereum. An increase in either means that less daily total gas is available for transactions. Analysis of the ommer rate is a big part of the research backing EIPs 2028 and 1559 (see 1, 2, 3). It is surprising, though, that neither mentions the empty block rate and that the research has methodological flaws. It would be preferable to have a more rigorous analysis considering both the ommer and empty rates, using appropriate statistical methods like logistic regression.

There are ways to lower the ommer and empty rate. The presumed root cause is mining pools not having the latest state available due to network and processing delays. One simple but undesirable solution is to centralize mining pools further so that the latest state is in one place. Slightly more decentralized solutions like bloxroute are creating dedicated interconnects between pools. An idea inspired by 'spy mining' would be for pools to pre-share the block that they are currently trying to mine. Other pools would prepare next blocks for each eventuality, and once a pool successfully mines its block, the others already know which block they want to mine on next and can switch in an instant. Going higher up the stack, improvements in node communication protocols and processing algorithms would help too; there is likely still some room for gains here. But in the end, lowering the ommer and empty rate by itself will improve the daily gas supply by at most about the 5% we saw.

So it seems there is a 95% gas limit, but what happens if people want to use more than the 95%?

Gas Prices

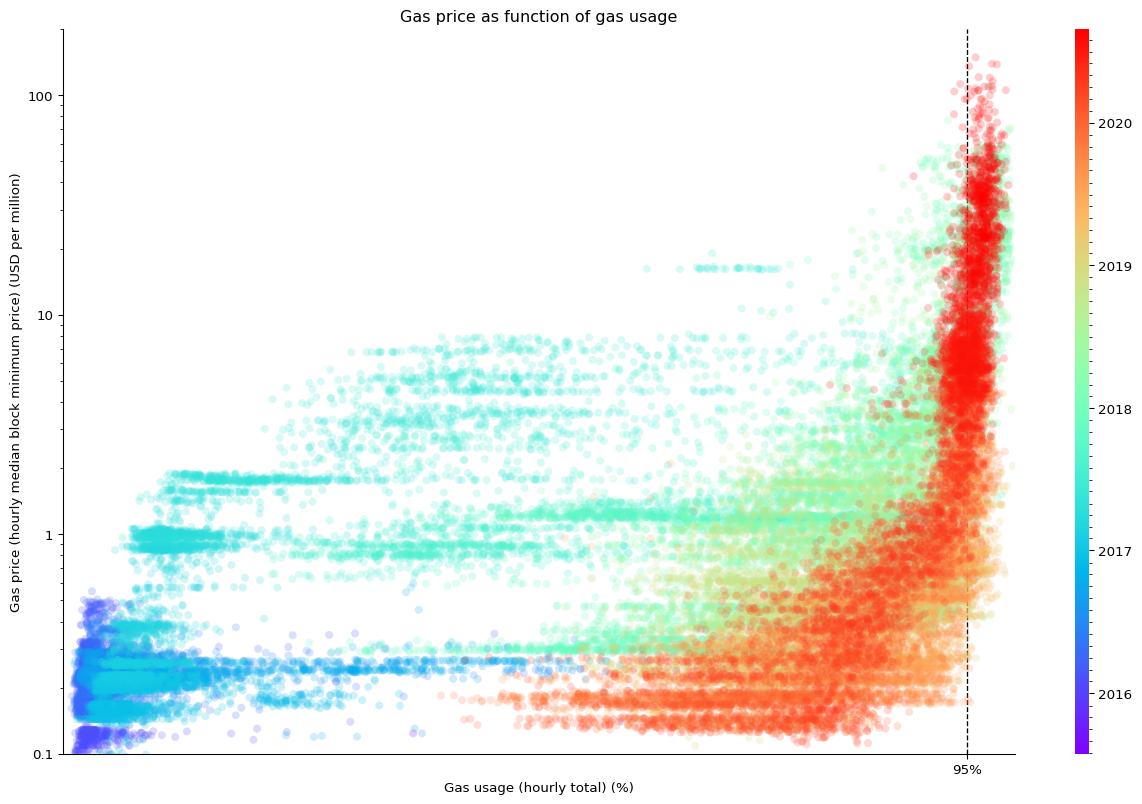

What happens when Ethereum gets close to the gas limit? Miners are free to select transactions as they desire (more about that later), but in practice they process them in order of highest-gasprice-first as this (approximately) maximizes their profit. The net effect is a first price auction on the available gas.
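To make the auction concrete, here is a minimal sketch of highest-gas-price-first block packing. It is a toy model under our own assumptions: the `Tx` type, the sample mempool and the tiny gas limit are invented for illustration, and real clients also have to respect per-account nonce ordering, which is ignored here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    sender: str     # label for the example only
    gas: int        # gas the transaction consumes
    gas_price: int  # bid, in gwei per unit of gas


def pack_block(mempool, gas_limit):
    """Greedily fill a block with the highest bidders first."""
    included, gas_used = [], 0
    for tx in sorted(mempool, key=lambda t: t.gas_price, reverse=True):
        if gas_used + tx.gas <= gas_limit:
            included.append(tx)
            gas_used += tx.gas
    return included


# Hypothetical mempool competing for a (deliberately tiny) block.
mempool = [
    Tx("game_nft", 100_000, 20),
    Tx("defi_trade", 300_000, 150),
    Tx("transfer", 50_000, 60),
    Tx("arbitrage", 400_000, 400),
]
block = pack_block(mempool, gas_limit=750_000)
print([tx.sender for tx in block])  # ['arbitrage', 'defi_trade', 'transfer']
```

The lowest bidder is the one left waiting, which is the crowding-out effect in miniature: when the block is full, the only way in is to outbid someone else.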

The gas price has become a textbook example of perfectly inelastic supply. It starts to increase noticeably as the network goes beyond 80% utilization and takes off vertically at the 95% mark. Any further growth in demand will only increase prices until demand is discouraged back to the same level. The only way for prices to go down is to raise the gas supply or decrease the demand, and the recent increase in the gas limit was not enough to meaningfully lower gas prices.

At first glance, any further interest in Ethereum will just raise the prices and not lead to more usage. In reality, lower-value usage will be crowded out by higher-value usage: there will be fewer trades of cheap game NFTs and more large DeFi trades.

Proposal EIP 1559 aims to make the gas supply more elastic on short timescales. During periods of high demand larger blocks can be created (up to 20 million gas). This should help smooth out the peaks in gas price and let transactions be included sooner. What it won't do however is change the long term supply inelasticity. Under EIP-1559 there will still be a long term fixed rate of gas, meaning the price will go up until demand is small enough. Under EIP-1559 there is still an incentive to pay a premium for being processed first within a block (assuming pools continue to author blocks in that order). This means front-running, gas-bidding-wars and miner extraction of value will continue to be a thing.

The numbers in the graph represent the minimal price to be included in the next block. If you are willing to wait longer the gas prices can be much lower. Historical data suggest a decent drop-off if you are willing to wait two minutes or longer. EIP-1559 should help to reduce the premium for faster processing.

So gas prices go up when we hit the gas limit, how do we raise this limit?

Gas Limit

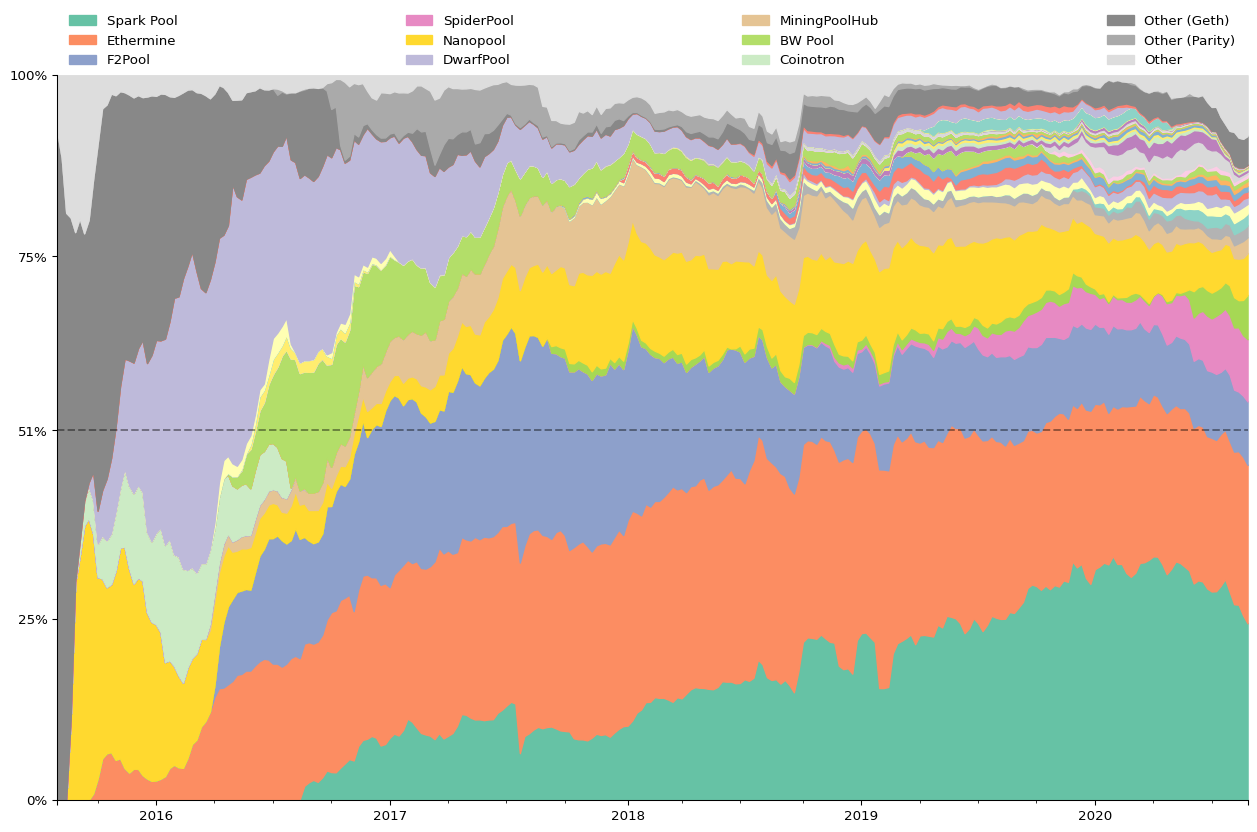

The mining pools decide the gas limit. Let's quickly recap how miners and pools work. Nearly all miners pool their resources. Instead of all trying to find the next block and risking long stretches without any payout, they pool their resources and get a steady income stream. This is facilitated by mining pools that verify each miner's contributions and author the next block to work on. The larger pools end up authoring a large fraction of all the blocks. Let's look at how these shares developed in Ethereum's history:

Nowadays, Sparkpool, Ethermine and F2Pool together author the majority of the blocks.

Besides participating in hard forks, pool operators have one important governance responsibility: they set Ethereum's gas limit. Unlike block time and gas price (which are emergent properties), the gas limit is actively decided on for each block. The new gas limit is constrained to be within about 0.1% of the previous block's, so each block can only make a small nudge up or down (yellow paper eq. 47). If they all agree, the nudges quickly compound so that in about 2.5 hours the gas limit can double or halve. If there is no agreement, the gas limit becomes the median weighted by pool size.
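The doubling time follows from compounding the per-block nudge. The sketch below is a rough estimate under our own simplifying assumptions: every block moves the limit by the maximum step, taken here as 1/1024 of the parent's limit (the roughly 0.1% bound mentioned above), blocks arrive every 13 seconds, and the protocol's integer rounding is ignored.

```python
import math

MAX_STEP = 1 / 1024  # assumed maximum relative change per block (about 0.1%)
BLOCK_TIME_S = 13    # assumed average block time in seconds


def blocks_needed(ratio):
    """Blocks required to scale the gas limit by `ratio` at the maximum step."""
    return math.ceil(math.log(ratio) / math.log(1 + MAX_STEP))


def hours_needed(ratio):
    return blocks_needed(ratio) * BLOCK_TIME_S / 3600


print(blocks_needed(2))                     # 711
print(round(hours_needed(2), 1))            # 2.6
print(blocks_needed(12_500_000 / 10_000_000))  # 229
```

Under these assumptions a unanimous vote doubles the limit in roughly two and a half hours, and a 25% increase takes under an hour.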

Currently, due to a lack of clear information about how miners will behave in reality, we are going with a fairly simple approach: a voting system. […] The hope is that in the future we will be able to soft-fork this into a more precise algorithm.

— Ethereum Design rationale (first added 2015-03)

The way miners set the gas limit is a stop-gap solution from the early days of Ethereum. As with many stop-gap solutions that are good enough, the stop-gap became semi-permanent. EIP 1559 proposes a different mechanism and is currently being discussed for the Berlin hardfork. Until then, pool operators can govern the gas supply much like OPEC governs oil production:

The #Ethereum miners are voting to increase the Block Gas Limit from 10,000,000 to 12,500,000.

— @etherchain_org (Ethermine pool operator)

Recently the two largest mining pools made the somewhat controversial decision to raise the daily gas production by 25%. The goal is to provide much-needed relief from the high transaction fees by increasing the gas supply. From what we have seen so far, the demand for transactions grows faster than the gas limit can be increased, which means prices might get temporary relief but will eventually go up again.

TL;DR: The #Ethereum miners don't give a fuck about the long term health of the network nor about DoS attacks.

Raising the gas limit comes with a big hidden cost to Ethereum's security. As we have seen, a higher gas limit can increase the ommer and empty block rate. This increase is small under normal transaction load. But for security we are not interested in normal behavior, only in worst-case adversarial behavior. Perez & Livshits (2019) study this worst case and generated transactions that are 100 times slower to process than normal ones for the same gas cost. If you fill a block with such transactions, it would take a node 90 seconds to process it, causing nodes to fall behind and pools to mine ommers and empty blocks. Since publication there have been some mitigations, but the problem is far from solved. This led two leading node developers, Péter Szilágyi and Alexey Akhunov, to criticize the decision to raise the gas limit.

So gas prices go up when we hit the gas limit, and it looks like we shouldn't increase the gas limit much further. What else can we do? Can we maybe lower the gas cost of transactions?

Gas Cost

The gas cost of a transaction is made up mostly of the cost of EVM operations. Transactions are made up of many EVM operations, and the cost of each operation is governed through EIPs and hard forks. In past hardforks, the gas cost of certain operations has been increased (EIPs 150, 160, 1884) or decreased (EIPs 1108, 2028, 2200). The planned Berlin hardfork also considers a number of gas-specific changes (EIPs 1380, 1559, 2046, 2565, 2537).

The goal of all these changes is to make the fees more accurately reflect the true cost of the operations. This means the cost of computing operations will go down as computers and algorithms become faster. The cost of storage operations is a different story. The cost of storage and lookups depends on the size of the chain state, and the size of Ethereum's state keeps growing. This growth is not offset by improvements in storage devices or databases.

This means storage will continue to be a big cost in DeFi. Creating a new balance costs 20 thousand gas and modifying an existing one 5 thousand. A transfer modifies at least two balances, an exchange at least four, and more complex DeFi transactions can involve many more costly pieces of state. There seems to be no easy way to reduce the amount of storage involved, and its cost is looking to go up if anything. On the bright side, layer two scaling solutions, which tend to be storage light and compute heavy, will look more favorable.
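As a rough illustration of how storage writes add up, the sketch below counts only the balance writes described above, using the two gas figures from the text. The function and constant names are ours, and everything else a transaction pays for (base cost, calldata, computation, reads, refunds) is deliberately left out.

```python
NEW_SLOT_GAS = 20_000     # creating a new balance (figure quoted above)
UPDATE_SLOT_GAS = 5_000   # modifying an existing balance (figure quoted above)


def storage_gas(new_slots, updated_slots):
    """Lower bound on gas spent on balance writes alone."""
    return new_slots * NEW_SLOT_GAS + updated_slots * UPDATE_SLOT_GAS


transfer_between_holders = storage_gas(new_slots=0, updated_slots=2)
transfer_to_new_holder = storage_gas(new_slots=1, updated_slots=1)
exchange_between_holders = storage_gas(new_slots=0, updated_slots=4)

print(transfer_between_holders)   # 10000
print(transfer_to_new_holder)     # 25000
print(exchange_between_holders)   # 20000
```

Even this lower bound shows why first-time recipients are expensive: a single new balance costs as much as four updates.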

Finally the same security concerns apply as with raising the gas limit: it's the worst case that matters. Naively optimizing the gas cost for the current true average cost of operations is very dangerous.

By now it is clear why scaling Ethereum is such a thorny problem. Before we talk about proposed solutions, we want to digress into one more limitation of current Ethereum that hurts DeFi users.

Miner extractable value

Block authors are bound by the consensus rules which provide some important freedoms such as transaction selection and ordering. For regular token transfers this does not matter much, but for DeFi transactions like exchange trades there is a significant economic value in front-running trades. More complicated exploits can be considered where a target transaction is sandwiched between two attacking ones. Daian et al. (2019) call this miner extractable value.

It does not appear that pools are maliciously using their transaction-ordering freedom, but they still benefit from it as if they did. Pools likely use Geth, which orders transactions by gas price (see 1, 2). This creates a gas-price auction: the highest-paying transaction goes in first. This has the unfortunate effect that anyone can front-run a trade by bidding higher. Competitive traders keep bidding the gas price up until the trade profit is entirely consumed by the gas fee. At this point all the exploitable value ends up as transaction fees in the miners' pockets.

In other situations there is value in being right after another transaction, for example to be the first to liquidate a position after a price oracle update. This is called back-running and also ends up profiting the miners.

The miner extractable value is ultimately extracted from regular DeFi users through bigger spreads, worse prices, higher fees and more failed transactions. For a great DeFi experience this should be addressed. The way to solve it is by restricting the transaction ordering freedom, for example by requiring transactions in a block to be ordered lowest-gas-price first.

Ok, so now we have a good overview of Ethereum's limitations and how they affect DeFi. Surely all the rock-star teams working on scaling are going to solve it, right?

Layer One, Version Two

There are many very impressive teams working on different solutions to the scaling problem. The solutions come in two flavors, layer one and layer two. Layer one solutions aim to build a more scalable alternative to Ethereum, layer two solutions build more scalable infrastructure on top of Ethereum. Let's start with layer one and leave layer two for the next post.

Let's start with the obvious: improving the performance of existing Ethereum. This is essentially what the Eth1x effort is doing. By improving the performance of Ethereum clients there is still a lot that can be done. Unfortunately Eth1x doesn't get nearly the support it deserves, so progress is slow. To get an idea of the performance that can be achieved this way we only have to look at Solana, which achieves over a thousand times the throughput of Ethereum with room to grow. The main downside of this approach is that it requires sophisticated hardware to run a full node.

Most of the other solutions have three things in common: the use of WebAssembly as the virtual machine, architectures with minimal state, and most importantly, sharding. Current Ethereum executes all transactions in sequence. In fact, putting transactions in a sequence is arguably the whole point of a blockchain. The downside of this model is that it is hard to do in parallel, so we cannot solve scaling easily by throwing more resources at it. This is where next-generation blockchains like Eth2.0 come in: they change the way transactions are executed so that they can be handled in parallel. This is done by splitting the blockchain into multiple loosely connected domains, a process called sharding. Within a shard things can still happen sequentially, but between shards they occur asynchronously. This allows all shards to run in parallel, scaling the network by roughly the number of shards. The domains we use for splitting also don't have to correspond with shards: we can have multiple domains assigned to the same shard, or even move them around to get load balancing. See Near protocol's Nightshade paper for a more in-depth overview of sharding.

Exactly where and how the chain is split into domains depends on the particular next-generation chain. They can be thought of as lying on a spectrum from fine-grained (many tiny domains) to coarse-grained (few large domains).

fine parallelization <---------------------------------> coarse parallelization

| DFinity | Eth 2.0 | Polkadot |
| --- | --- | --- |
| actors | TBD (contracts?) | chains |

Two projects conveniently span the spectrum of granularity. DFinity is on the fine-grained end, where each actor is its own little domain and every actor interaction is asynchronous. A bit less fine-grained is Near protocol, where each contract is its own domain. On the coarse-grained end is Polkadot, where the domain is an entire shard, in this context more accurately called a parachain. It's too early to tell where Ethereum 2.0 will land from a Dapp developer perspective. The Eth1EE (the post-Eth2 future of Eth1) will be coarse and have its boundaries coincide with shards, with current Ethereum becoming a single shard. A dedicated Eth2EE may go for a more fine-grained solution. The advantage of fine-grained solutions is that they are transparent: they can make all inter-contract calls look the same, whether they cross shard boundaries or not. This in turn allows easy load balancing by moving contracts between shards.

The downside is that domain-crossing transactions are no longer atomic; instead they become concurrent and partially irreversible. In DFinity and Near this shows up as inter-contract calls being async and returning a promise that you need to await. During an await, all that has happened so far gets committed to the chain, and other people's transactions can then get piled on top of it. At this point you cannot revert what has happened so far. When the await finally resolves, it can return success or failure from the contract call. There are various proposals to avoid this and get some form of atomicity back across shards, but they have their own downsides. Embracing the non-atomicity looks like the natural outcome.

For DeFi an asynchronous transferFrom call poses quite a challenge. Imagine a simple exchange between two parties: Alice and Bob want to exchange Eth for Dai. The basic contract would look like:

    await eth.transferFrom(alice, bob, 1)
    await dai.transferFrom(bob, alice, 300)

But now we need to handle the errors. If the first transfer fails, we can simply stop the transaction. But if the second transfer fails, we need to give Alice back the 1 Eth. The problem is, Bob may have already spent it by the time we get there. One way to solve this is using an escrow:

    result = await eth.transferFrom(alice, escrow, 1)
    if result is failed:
        return "Transaction failed: Alice can not pay"
    result = await dai.transferFrom(bob, alice, 300)
    if result is failed:
        result = await eth.transferFrom(escrow, alice, 1)  # This can never fail.
        return "Transaction failed: Bob can not pay"
    await eth.transferFrom(escrow, bob, 1)  # This can never fail.

Good, no-one is losing tokens anymore. But now Bob has a free exclusive option on Alice's offer. While Alice's tokens are being held in escrow they are not available for other trades, and it is not yet guaranteed that the transaction with Bob will succeed. You could solve this by penalizing bad behavior, but it's hard to determine appropriate penalties as DeFi transactions can be very valuable. You could also solve it by requiring all market participants to have their funds escrowed from the beginning in a single deposit contract, but this centralizes state again and essentially undoes the sharding.
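For readers who want to experiment with the escrow flow, here is a minimal runnable sketch using Python's asyncio. It is a toy model, not how any particular chain works: the `Token` class, its `transfer_from` method and the in-memory balances are all hypothetical stand-ins, and each `await` merely marks the point where a real cross-shard call would commit state and let other transactions interleave.

```python
import asyncio


class Token:
    """Toy in-memory token; each call stands in for an async cross-shard call."""

    def __init__(self, balances):
        self.balances = dict(balances)

    async def transfer_from(self, src, dst, amount):
        await asyncio.sleep(0)  # yield control, as a cross-shard call would
        if self.balances.get(src, 0) < amount:
            return False
        self.balances[src] -= amount
        self.balances[dst] = self.balances.get(dst, 0) + amount
        return True


async def swap(eth, dai, alice, bob, eth_amount, dai_amount, escrow="escrow"):
    # Step 1: lock Alice's Eth in escrow so Bob cannot spend it prematurely.
    if not await eth.transfer_from(alice, escrow, eth_amount):
        return "Transaction failed: Alice can not pay"
    # Step 2: Bob pays Alice; on failure, refund Alice from escrow.
    if not await dai.transfer_from(bob, alice, dai_amount):
        await eth.transfer_from(escrow, alice, eth_amount)
        return "Transaction failed: Bob can not pay"
    # Step 3: release the escrowed Eth to Bob.
    await eth.transfer_from(escrow, bob, eth_amount)
    return "ok"


eth = Token({"alice": 1})
dai = Token({"bob": 100})  # Bob cannot cover the 300 Dai
result = asyncio.run(swap(eth, dai, "alice", "bob", 1, 300))
print(result)        # Transaction failed: Bob can not pay
print(eth.balances)  # {'alice': 1, 'escrow': 0}
```

Between step 1 and the refund, Alice's Eth sits in escrow and is unavailable to her, which is exactly the free option described above.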

The other thing to note is how tricky these concurrency issues can be. In a real exchange there is also the fill-state of the order that needs to be updated, which further complicates the protocol. The reentrancy bugs that have plagued Ethereum 1.0 are just butterflies compared to the bed bug infestation that is concurrency bugs. Concurrency bugs are non-deterministic and never seem to happen during testing. As we saw in the simple exchange, they require rethinking the architecture from the ground up because like bed bugs, the only reliable way to get rid of them is to tear everything down and rebuild it from the ground up.

Exchange is a foundation that the rest of DeFi builds on, and it is a notoriously serial process. We've seen how orderbook-based exchanges pose a challenge. Automated market maker exchanges are simpler because they already have reserve funds in escrow, but there the reserve balances themselves form the bottleneck preventing parallelization. Even in the fastest traditional exchanges the settlements are ultimately serialized on a single matching engine without concurrency (though hopefully with redundancy). For a deep dive on how traditional exchanges work, Brian Nigito's talk is excellent.

That doesn't mean these problems are impossible to solve. The simplest solution would be for all these protocols to deploy independent instances on each shard and have arbitrageurs keep them in sync. Or maybe we can get a single synchronous shard to be performant enough to contain all of DeFi, and we can stop worrying about concurrency.

In this post, we went through the details of Ethereum's limitations when it comes to scaling DeFi. As we have seen, it's a complex problem that has no easy solution. In the following posts, we will go through a specific class of solutions (L2) and present 0x's own strategy.

References

- Daniel Perez & Benjamin Livshits (2019). "Broken Metre: Attacking Resource Metering in EVM."

- Daian et al. (2019). "Flash Boys 2.0: Frontrunning, Transaction Reordering, and Consensus Instability in Decentralized Exchanges."

- Brian Nigito (2017). "How to Build an Exchange."

- Danny Ryan (2020). "The State of Eth2, June 2020."

- Scott Shapiro & William Villanueva (2020). "ETH 2 Phase 2 WIKI."

- Near Protocol sharding design